Commentary on Nvidia Q1 FY25

10-to-1 stock split with no air pocket in sight

Another $2bn beat on guidance, the third quarter in a row

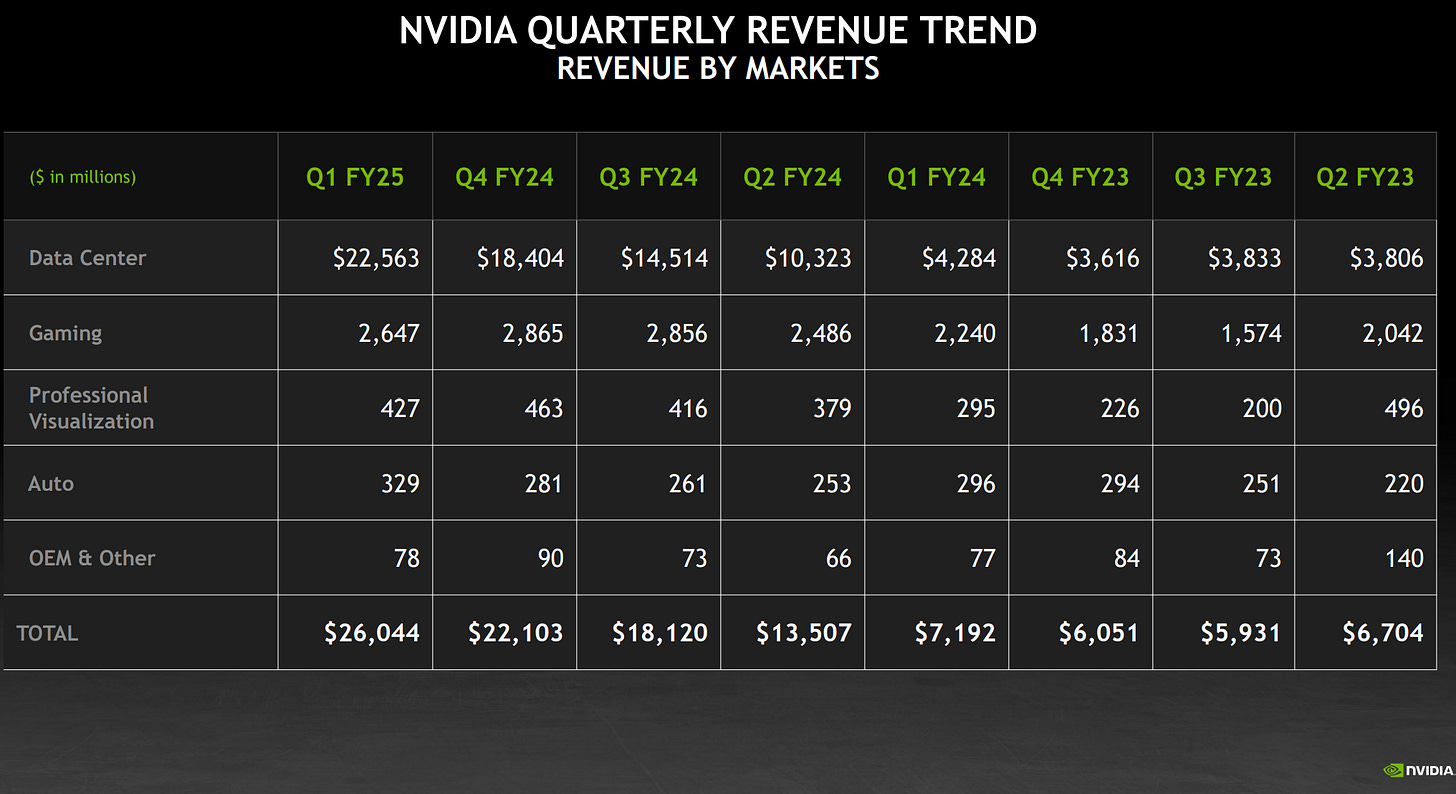

Source: Nvidia

$28 billion revenue guidance for Q2

Possibly another $2 billion beat as H200 ramps
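To put the guidance in context, here is a minimal sketch of the arithmetic. It assumes Q1 FY25 revenue of roughly $26.0 billion (the reported figure, rounded) as the base. The $2 billion beat is the scenario floated above, not a forecast, and the function names are illustrative.

```python
def scenario_revenue(guidance_bn: float, beat_bn: float) -> float:
    """Revenue if the company beats its guidance by beat_bn ($bn)."""
    return guidance_bn + beat_bn

def growth_pct(new_bn: float, old_bn: float) -> float:
    """Sequential growth in percent."""
    return (new_bn / old_bn - 1.0) * 100.0

Q1_REVENUE_BN = 26.0        # Q1 FY25 reported revenue, rounded
Q2_GUIDANCE_BN = 28.0       # Q2 FY25 guidance
HYPOTHETICAL_BEAT_BN = 2.0  # the "another $2 billion" scenario, not a forecast

q2_scenario = scenario_revenue(Q2_GUIDANCE_BN, HYPOTHETICAL_BEAT_BN)
print(f"Q2 scenario revenue: ${q2_scenario:.1f}bn")
print(f"Guided sequential growth:   {growth_pct(Q2_GUIDANCE_BN, Q1_REVENUE_BN):.1f}%")
print(f"Scenario sequential growth: {growth_pct(q2_scenario, Q1_REVENUE_BN):.1f}%")
```

Guidance alone implies about 7.7% sequential growth. A repeat $2 billion beat would roughly double that to about 15.4%.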

The 10-for-1 stock split is all that matters for the stock today

In Q1, we returned $7.8 billion to shareholders in the form of share repurchases and cash dividends. Today, we announced a 10-for-1 split of our shares with June 10 as the first day of trading on a split adjusted basis. We are also increasing our dividend by 150%.
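A split changes the share count and every per-share figure but not the value of a holding. The dividend raise, by contrast, does change income. The sketch below assumes a hypothetical 5-share position at a hypothetical $1,000 pre-split price and a pre-split quarterly dividend of $0.04 per share. It also assumes the 150% raise applies to the pre-split dividend.

```python
SPLIT_RATIO = 10          # 10-for-1 split
DIVIDEND_INCREASE = 1.50  # "increasing our dividend by 150%"

def split_adjust_per_share(value: float, ratio: int = SPLIT_RATIO) -> float:
    """Per-share figures (price, dividend, EPS) are divided by the split ratio."""
    return value / ratio

def split_adjust_shares(shares: int, ratio: int = SPLIT_RATIO) -> int:
    """Each pre-split share becomes `ratio` post-split shares."""
    return shares * ratio

def raise_dividend(dividend: float, increase: float = DIVIDEND_INCREASE) -> float:
    """A 150% increase leaves the dividend at 2.5x its old level."""
    return dividend * (1.0 + increase)

# Hypothetical holding and price, for illustration only.
shares, price = 5, 1000.0
old_dividend = 0.04  # assumed pre-split quarterly dividend per share, $

new_dividend = raise_dividend(old_dividend)          # pre-split basis
post_shares = split_adjust_shares(shares)
post_price = split_adjust_per_share(price)
post_dividend = split_adjust_per_share(new_dividend)  # split-adjusted

print(f"Position value before split: ${shares * price:,.2f}")
print(f"Position value after split:  ${post_shares * post_price:,.2f}")
print(f"Quarterly dividend income: ${post_shares * post_dividend:.2f} "
      f"(was ${shares * old_dividend:.2f})")
```

The position is worth the same on either side of the split. Dividend income rises 2.5 times: under these assumptions $0.04 becomes $0.10 before the split, or $0.01 per post-split share.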

Air pocket from hyperscalers filled by enterprise and consumer internet

Hyperscalers went from more than 50% of Data Center revenue to the mid-40s percent. Hyperscalers are waiting for Blackwell, but enterprise and consumer Internet companies filled the gap. This is positive for Supermicro over the next two quarters.
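A falling share does not mean falling dollars when the total is growing fast. Here is a rough check. It takes Data Center revenue of about $18.4 billion in Q4 FY24 and $22.6 billion in Q1 FY25 (reported, rounded). It assumes 50% and 45% as point estimates for ">50%" in the prior quarter and "mid-40s" this quarter, because the exact shares were not disclosed.

```python
def segment_revenue(total_bn: float, share: float) -> float:
    """Dollar revenue for a customer group holding `share` of the total."""
    return total_bn * share

def growth_pct(new: float, old: float) -> float:
    """Sequential growth in percent."""
    return (new / old - 1.0) * 100.0

# Approximate reported Data Center revenue, $bn, rounded.
DC_Q4_FY24 = 18.4
DC_Q1_FY25 = 22.6
# Assumed point estimates for ">50%" (Q4 FY24) and "mid-40s" (Q1 FY25).
SHARE_BEFORE = 0.50
SHARE_AFTER = 0.45

hyper_before = segment_revenue(DC_Q4_FY24, SHARE_BEFORE)
hyper_after = segment_revenue(DC_Q1_FY25, SHARE_AFTER)
rest_before = DC_Q4_FY24 - hyper_before  # enterprise, consumer Internet, etc.
rest_after = DC_Q1_FY25 - hyper_after

print(f"Hyperscalers:  ${hyper_before:.2f}bn -> ${hyper_after:.2f}bn "
      f"({growth_pct(hyper_after, hyper_before):+.1f}%)")
print(f"Everyone else: ${rest_before:.2f}bn -> ${rest_after:.2f}bn "
      f"({growth_pct(rest_after, rest_before):+.1f}%)")
```

Under these assumptions, hyperscaler revenue still grows about 10% sequentially while all other customers grow about 35%. That is how the share can fall while large cloud providers "continue to drive strong growth".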

Strong sequential Data Center growth was driven by all customer types, led by enterprise and consumer Internet companies. Large cloud providers continue to drive strong growth as they deploy and ramp NVIDIA AI infrastructure at scale and represented the mid-40s as a percentage of our Data Center revenue.

GPU demand is coming from both training and inference

In our trailing 4 quarters, we estimate that inference drove about 40% of our Data Center revenue. Both training and inference are growing significantly.
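To size the 40% estimate in dollars, the sketch below sums approximate reported Data Center revenue for the trailing four quarters (Q2 FY24 through Q1 FY25, rounded to one decimal) and applies Nvidia's "about 40%". The remainder is labeled "training and other" because the estimate does not split it further.

```python
# Approximate reported Data Center revenue, $bn, rounded to one decimal.
trailing_dc_bn = {
    "Q2 FY24": 10.3,
    "Q3 FY24": 14.5,
    "Q4 FY24": 18.4,
    "Q1 FY25": 22.6,
}
INFERENCE_SHARE = 0.40  # Nvidia's "about 40%" estimate

def split_by_workload(quarterly_bn: dict[str, float],
                      inference_share: float) -> tuple[float, float, float]:
    """Return (total, inference, training_and_other) over the given quarters."""
    total = sum(quarterly_bn.values())
    inference = total * inference_share
    return total, inference, total - inference

total, inference, training_and_other = split_by_workload(trailing_dc_bn, INFERENCE_SHARE)
print(f"Trailing four quarters: ${total:.1f}bn")
print(f"Inference (~40%):       ${inference:.1f}bn")
print(f"Training and other:     ${training_and_other:.1f}bn")
```

On these rounded figures, inference accounts for roughly $26 billion of about $66 billion in trailing Data Center revenue.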

Sovereign AI demand quantified

From nothing the previous year, we believe sovereign AI revenue can approach the high single-digit billions this year. The importance of AI has caught the attention of every nation.

Demand continues to exceed supply into 2025

While supply for H100 grew, we are still constrained on H200. At the same time, Blackwell is in full production. We are working to bring up our system and cloud partners for global availability later this year. Demand for H200 and Blackwell is well ahead of supply, and we expect demand may exceed supply well into next year.

Networking revenue disclosed

Source: Nvidia

Production of B100 has ramped at TSMC and Nvidia will recognise Blackwell revenue this year

A few more months before B100 is packaged at TSMC. Is Samsung even qualified for HBM3e yet?

But our production shipments will start in Q2 and ramp in Q3, and customers should have data centers stood up in Q4.

Nvidia is providing a system solution, not just a chip

The third reason has to do with the fact that we build AI factories. And this is becoming more apparent to people that AI is not a chip problem only. It starts, of course, with very good chips and we build a whole bunch of chips for our AI factories, but it's a systems problem. In fact, even AI is now a systems problem. It's not just one large language model. It's a complex system of a whole bunch of large language models that are working together. And so the fact that NVIDIA builds this system causes us to optimize all of our chips to work together as a system, to be able to have software that operates as a system, and to be able to optimize across the system.

The first comment that you made is really a great comment, which is how is it that we're moving so fast and advancing them quickly, because we have all the stacks here. We literally build the entire data center and we can monitor everything, measure everything, optimize across everything. We know where all the bottlenecks are.

The highest performance is the lowest TCO when everything else is a constraint. In-house custom chips won’t be able to iterate faster.

In simple numbers, if you had a $5 billion infrastructure and you improved the performance by a factor of 2, which we routinely do, when you improve the infrastructure by a factor of 2, the value to you is $5 billion. All the chips in that data center doesn't pay for it. And so the value of it is really quite extraordinary. And this is the reason why today, performance matters in everything. This is at a time when the highest performance is also the lowest cost because the infrastructure cost of carrying all of these chips cost a lot of money. And it takes a lot of money to fund the data center, to operate the data center, the people that goes along with it, the power that goes along with it, the real estate that goes along with it, and all of it adds up. And so the highest performance is also the lowest TCO.
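The argument in the quote reduces to two pieces of arithmetic. Here is a minimal sketch. It assumes a hypothetical $5 billion build split into $2 billion of chips and $3 billion of everything else (facility, power, people, real estate). It also assumes the faster system fits in the same facility at the same non-chip cost. The class and function names are illustrative.

```python
from dataclasses import dataclass

@dataclass
class Infrastructure:
    chip_cost_bn: float   # accelerators
    other_cost_bn: float  # facility, power, people, real estate
    throughput: float     # relative units of AI work delivered

    @property
    def total_cost_bn(self) -> float:
        return self.chip_cost_bn + self.other_cost_bn

    def cost_per_unit_work(self) -> float:
        return self.total_cost_bn / self.throughput

def value_of_speedup(infra_cost_bn: float, speedup: float) -> float:
    """Build-out avoided: matching the faster output with the old system
    would take (speedup - 1) extra copies of the infrastructure."""
    return infra_cost_bn * (speedup - 1.0)

print(value_of_speedup(5.0, 2.0))  # 5.0, the "$5 billion" in the quote

# Hypothetical: a $5bn fast build vs. the same facility with free but half-speed chips.
fast = Infrastructure(chip_cost_bn=2.0, other_cost_bn=3.0, throughput=2.0)
free_but_slow = Infrastructure(chip_cost_bn=0.0, other_cost_bn=3.0, throughput=1.0)
print(fast.cost_per_unit_work())           # 2.5
print(free_but_slow.cost_per_unit_work())  # 3.0
```

Even with chips priced at zero, the slower system costs more per unit of work because the non-chip costs are spread over half the output. That is the sense in which the highest performance is also the lowest TCO. The simplification ignores any difference in power draw between the two systems.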

China revenue is derisked

It is the case that our business in China is substantially lower than the levels of the past. And it's a lot more competitive in China now because of the limitations on our technology. And so those matters are true.

100 different computer system configurations are positive for the ODMs

We disaggregate all of the components that make sense and we integrate it into computer makers. We have 100 different computer system configurations that are coming this year for Blackwell. And that is off the charts. Hopper, frankly, had only half, but that's at its peak. It started out with way less than that even. And so you're going to see liquid-cooled version, air-cooled version, x86 versions, Grace versions, so on and so forth. There's a whole bunch of systems that are being designed. And they're offered from all of our ecosystem of great partners. Nothing has really changed

R100 is coming at the end of 2025

I can announce that after Blackwell, there's another chip. And we are on a 1-year rhythm. And you can also count on us having new networking technology on a very fast rhythm. We're announcing Spectrum-X for Ethernet. But we're all in on Ethernet, and we have a really exciting road map coming for Ethernet. We have a rich ecosystem of partners.